How do we build AI that is not only powerful but also verifiably trustworthy? AI that follows our institutional values, not someone else’s? AI we can audit when things go wrong?

For leaders, ethicists, and engineers navigating the deployment of artificial intelligence, these questions define the central challenge of our time: moving beyond blind faith in a “black box” to a model of provable integrity.

The Self-Alignment Framework provides a complete answer, operating on two distinct but interconnected levels: the philosophical and the practical.

The Philosophical Foundation: SAF

The Self-Alignment Framework (SAF) is a universal model of ethical reasoning, a timeless blueprint for how any intelligent agent, whether human or artificial, can achieve and maintain alignment with a defined set of values.

SAF is rooted in over two millennia of Western philosophical inquiry, from Plato’s tripartite soul and Aristotelian virtue ethics to Augustine’s theory of the Will and Kant’s moral reasoning. From this tradition it defines four essential faculties of ethical cognition: the Intellect (to reason and propose), the Will (to decide and enforce), the Conscience (to audit and judge), and the Spirit (to learn and maintain character over time).

SAF provides the why: the deep, human-centric principles that give AI governance its meaning and legitimacy.

The Technical Implementation: SAFi

The Self-Alignment Framework Interface (SAFi) is what emerges when you take these timeless principles and make them computable, auditable, and deployable at scale. It is an open-source governance engine that transforms any Large Language Model from a generic tool into a verifiably aligned agent.
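To make “computable and auditable” faculties more concrete, here is a minimal Python sketch built on deliberately simplified assumptions. The Intellect is any prompt-to-text function. The Will blocks drafts that break a hard rule. The Conscience checks the draft against named values. Every decision is captured in an audit record. All names here (`ValueProfile`, `AuditRecord`, `govern`, the substring rules, the stand-in models) are hypothetical illustrations and not SAFi’s actual API. A real deployment would use far richer checks than substring matching.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: a "model" is any function from prompt to text, so the
# governance layer below does not depend on a specific LLM vendor or API.
Model = Callable[[str], str]


@dataclass
class ValueProfile:
    """Hypothetical, illustrative value profile (not SAFi's real schema)."""
    forbidden: list[str]                      # hard rules enforced by the Will
    checks: dict[str, Callable[[str], bool]]  # named values audited by the Conscience


@dataclass
class AuditRecord:
    """One traceable decision: what was proposed, decided, and judged."""
    prompt: str
    draft: str
    approved: bool
    reason: str
    scores: dict[str, bool]


def intellect(model: Model, prompt: str) -> str:
    # Intellect: reason and propose a draft with whatever model is plugged in.
    return model(prompt)


def will(draft: str, profile: ValueProfile) -> tuple[bool, str]:
    # Will: decide and enforce by blocking any draft that breaks a hard rule.
    for term in profile.forbidden:
        if term.lower() in draft.lower():
            return False, f"blocked: contains {term!r}"
    return True, "approved"


def conscience(draft: str, profile: ValueProfile) -> dict[str, bool]:
    # Conscience: audit the draft against each named value.
    return {name: check(draft) for name, check in profile.checks.items()}


def govern(model: Model, prompt: str, profile: ValueProfile) -> AuditRecord:
    # Run the faculties in order and keep the full trail for later review.
    draft = intellect(model, prompt)
    approved, reason = will(draft, profile)
    scores = conscience(draft, profile)
    return AuditRecord(prompt, draft, approved, reason, scores)


def cites_source(text: str) -> bool:
    # Toy value check: does the answer name a source?
    return "source:" in text.lower()


# Two hypothetical stand-in "models" for demonstration only.
def cautious(prompt: str) -> str:
    return f"Answer to {prompt!r}. Source: annual report."


def reckless(prompt: str) -> str:
    return "This fund has guaranteed returns!"


profile = ValueProfile(forbidden=["guaranteed returns"],
                       checks={"cites_source": cites_source})

for model in (cautious, reckless):
    record = govern(model, "Should I invest?", profile)
    print(record.approved, record.reason, record.scores)
```

`govern` only assumes a prompt-to-text function. Swapping `cautious` for `reckless`, or for a real LLM client, therefore leaves the value profile and the audit logic untouched. In this toy form, that is the separation between “character” and “brain” that the principles below describe.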

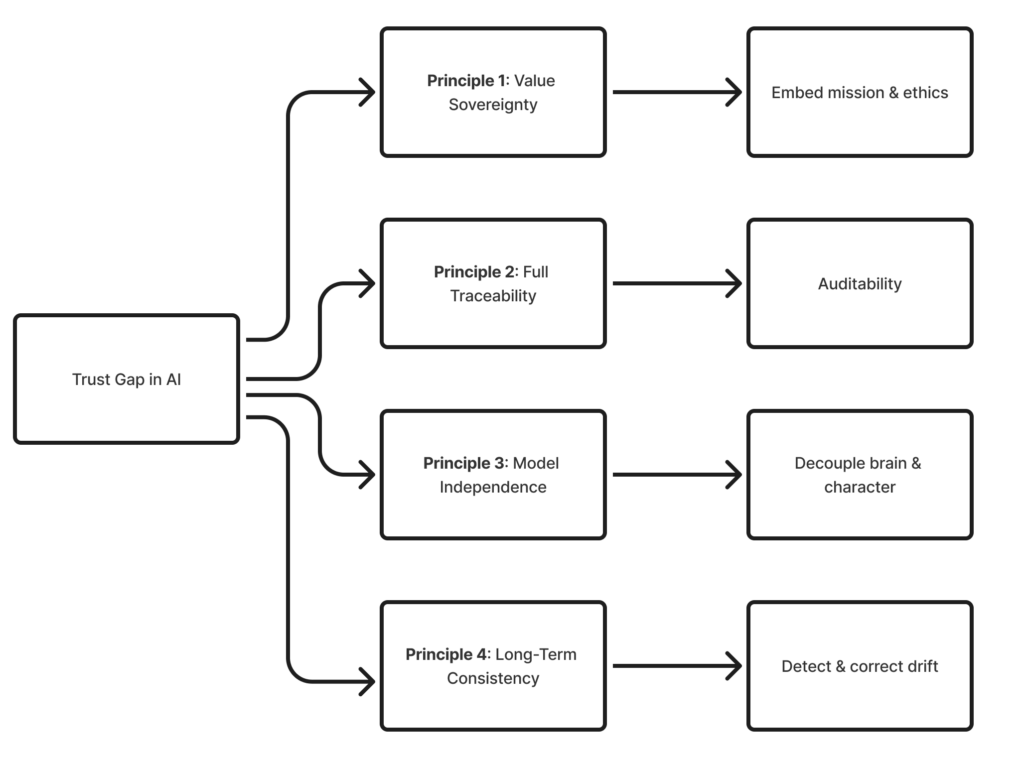

SAFi provides the how: the concrete, production-ready architecture that puts philosophy to work. It addresses the most critical problems in AI safety and organizational strategy through four core principles:

Value Sovereignty

An organization has the ultimate authority and capability to embed and enforce its own unique mission and values in the AI systems it deploys. No vendor lock-in. No imposed ethics. Your institution, your values, faithfully executed.

Full Traceability

Every AI decision is transparent and auditable, solving the “black box” problem by creating a verifiable record of reasoning. When things go wrong, you know exactly what happened and why.

Model Independence

The architectural freedom to separate the AI’s governance layer (its “character”) from its underlying generative engine (its “brain”). Swap models as technology evolves without rebuilding your entire ethical framework.

Long-Term Consistency

The capability of an AI to maintain its core identity and adhere to its mission over time, using stateful memory to detect and correct for “ethical drift.” Not just rule enforcement, but genuine character formation.
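One simple way to picture “stateful memory” for drift detection is the following Python sketch. It assumes each turn already receives an alignment score between 0 and 1 (for example, the share of value checks passed). It keeps an exponential moving average of those scores and flags drift when the long-term average falls below a threshold. The class name `SpiritMemory`, the moving-average technique, and the `alpha` and `threshold` values are all illustrative assumptions, not SAFi’s documented mechanism. The sketch covers only detection. Correction, such as feeding the drift flag back to the Intellect as a reminder of its values, is left out.

```python
from dataclasses import dataclass, field


@dataclass
class SpiritMemory:
    """Hypothetical stateful memory that tracks alignment across turns.

    alpha: weight given to the newest score in the moving average (assumed).
    threshold: long-term average below which drift is flagged (assumed).
    """
    alpha: float = 0.2
    threshold: float = 0.7
    average: float = 1.0  # start by assuming full alignment
    history: list[float] = field(default_factory=list)

    def update(self, score: float) -> bool:
        """Record one turn's alignment score in [0, 1]; return True if drifting."""
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must be in [0, 1]")
        self.history.append(score)
        # Exponential moving average: recent turns count more, but a single
        # bad turn does not on its own redefine the agent's character.
        self.average = self.alpha * score + (1 - self.alpha) * self.average
        return self.average < self.threshold


memory = SpiritMemory()
for turn, score in enumerate([1.0, 0.9, 0.4, 0.5, 0.4, 0.3], start=1):
    drifting = memory.update(score)
    print(f"turn {turn}: score={score:.1f} average={memory.average:.3f} drift={drifting}")
```

In this example, the first low scores only pull the average down gradually. Drift is flagged only after a sustained run of poor turns. A moving average is one design choice among many: it favors stability over reacting to every single turn.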

Why should you care about this framework?

We stand at a crossroads. One path leads to AI systems where ethics are proprietary, opaque, and controlled by a handful of corporations, where every organization must accept someone else’s values baked into the technology itself.

The other path leads to AI as neutral infrastructure: transparent, auditable, and governed by legitimate democratic institutions and by organizations exercising sovereignty over their own values. On this path, technology serves values rather than imposing them.

SAF and SAFi chart this second path.

Open by Design

SAF (the philosophical framework) is available under the MIT license, free for anyone to study, adopt, or build upon.

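SAFi (the implementation framework) is licensed under GPL-3.0, which requires anyone who distributes a modified version to release it under the same license. This keeps the governance infrastructure open, encourages improvements to flow back to the community, and prevents any entity from turning what should remain a public good into a closed, proprietary product.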

Because the question of who controls AI’s values is too important to be left to proprietary systems and corporate alignment.