Introducing SAFi

SAFi is the first working implementation of our Self-Alignment Framework (SAF), a closed-loop ethical reasoning system designed to govern the behavior of AI models such as GPT or Claude.

What Is SAFi?

SAFi is a real-time ethical reasoning engine that governs and evaluates the behavior of AI systems—ensuring every output is aligned with a declared set of values.

Unlike standard AI filters or prompt-engineered guardrails, SAFi is grounded in the Self-Alignment Framework (SAF)—a closed-loop ethical architecture composed of five interdependent faculties:

Values → Intellect → Will → Conscience → Spirit

This structure enables SAFi to:

- Log every ethical decision step for full transparency and auditability

- Evaluate both the reasoning and the output of AI systems

- Suppress responses that violate the ethical framework

- Track value drift and long-term ethical coherence
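
As a rough illustration of how such a loop could be wired together, the sketch below passes a draft response through Intellect, Will, Conscience, and Spirit stages in order. Every name in it (`run_loop`, `Decision`, `VALUES`, the phrase-matching checks) is a hypothetical stand-in and is not taken from SAFi's actual code; a real system would call a language model where this sketch uses simple string checks.

```python
from dataclasses import dataclass, field

# Hypothetical declared values: each maps to phrases that would violate it.
VALUES = {
    "honesty": ["guaranteed cure"],
    "privacy": ["home address"],
}

@dataclass
class Decision:
    prompt: str
    draft: str
    approved: bool = False
    ledger: dict = field(default_factory=dict)  # value -> +1 upheld, -1 violated
    spirit: float = 0.0
    log: list = field(default_factory=list)     # one entry per faculty

def intellect(d):
    # Stand-in for interpreting the prompt and proposing a draft.
    d.log.append(("intellect", f"interpreted prompt: {d.prompt!r}"))

def will(d):
    # Approve only if the draft contains no phrase that violates a value.
    text = d.draft.lower()
    d.approved = not any(p in text for ps in VALUES.values() for p in ps)
    d.log.append(("will", "approved" if d.approved else "suppressed"))

def conscience(d):
    # Record, per value, whether the draft upheld or violated it.
    text = d.draft.lower()
    for value, phrases in VALUES.items():
        d.ledger[value] = -1 if any(p in text for p in phrases) else 1
    d.log.append(("conscience", dict(d.ledger)))

def spirit(d):
    # Summarize the ledger as a single score in [-1, 1].
    d.spirit = sum(d.ledger.values()) / len(d.ledger)
    d.log.append(("spirit", d.spirit))

def run_loop(prompt, draft):
    d = Decision(prompt, draft)
    for stage in (intellect, will, conscience, spirit):
        stage(d)
    return d
```

Calling `run_loop("What helps a cold?", "Rest and fluids usually help.")` returns a `Decision` whose `log` holds one entry per faculty, which is the property that makes each step auditable.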

What Problems Does SAFi Solve?

Because SAFi works at the foundational level of moral reasoning, it addresses some of the deepest unresolved problems in AI and institutional decision-making.

1. Opaque AI Reasoning (The Black Box Problem)

Most AI models—like ChatGPT or Claude—can generate responses, but they don’t show their moral logic. There’s no ethical trace, no internal rationale to audit.

SAFi makes every decision explainable. Every output accountable.

It logs how the system interpreted a prompt (Intellect), whether it approved the action (Will), how each value was upheld or violated (Conscience), and whether the system is drifting from its declared ethics (Spirit).
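
One way to picture such a log entry is as a structured record with one field per faculty. The sketch below is an assumption about what a record could look like, not SAFi's actual log schema; the function name `make_audit_record` and all field names are hypothetical.

```python
import json
from datetime import datetime, timezone

def make_audit_record(prompt, interpretation, approved, ledger, spirit_score):
    """Build one JSON-serializable audit entry (hypothetical schema)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "intellect": {"interpretation": interpretation},  # how the prompt was read
        "will": {"approved": approved},                   # was the action allowed
        "conscience": ledger,                             # value -> upheld/violated
        "spirit": {"score": spirit_score},                # alignment summary
    }

record = make_audit_record(
    prompt="Summarize this patient note.",
    interpretation="request for a clinical summary",
    approved=True,
    ledger={"privacy": "upheld", "honesty": "upheld"},
    spirit_score=1.0,
)

# One line of JSON per decision keeps the log append-only and easy to search.
line = json.dumps(record, sort_keys=True)
```

Writing each decision as a single JSON line is a design choice made for this sketch: it lets an auditor replay decisions in order without a database.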

2. Value Drift

Over time, both intelligent systems and institutions tend to drift from their stated values—slowly, invisibly, and often irreversibly.

SAFi detects and reflects on that drift in real time.

It monitors which values are consistently upheld, which are ignored, and how patterns evolve. Spirit then synthesizes this into a measurable moral alignment score, offering long-term ethical visibility.
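
The source does not say how the alignment score is computed. As a minimal sketch, assume each decision yields a per-value result of +1 (upheld) or -1 (violated), and that the score is the average upheld rate over a rolling window of recent decisions. The class `DriftTracker` and its methods are hypothetical names chosen for this illustration.

```python
from collections import deque

class DriftTracker:
    """Rolling per-value history; a sketch, not SAFi's actual Spirit logic."""

    def __init__(self, values, window=50):
        # Keep only the most recent `window` results for each declared value.
        self.history = {v: deque(maxlen=window) for v in values}

    def record(self, ledger):
        # ledger maps a declared value to +1 (upheld) or -1 (violated).
        for value, score in ledger.items():
            self.history[value].append(score)

    def upheld_rate(self, value):
        # Fraction of recent decisions that upheld this value.
        h = self.history[value]
        return sum(1 for s in h if s > 0) / len(h) if h else None

    def alignment_score(self):
        # Mean upheld rate across all values that have any history.
        rates = [self.upheld_rate(v) for v in self.history]
        rates = [r for r in rates if r is not None]
        return sum(rates) / len(rates) if rates else None
```

Under these assumptions, a falling `upheld_rate` for one value while the others stay steady is the kind of pattern that would show up as drift.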

3. Lack of Ethical Alignment

Most AI systems today simulate ethics through filters or fine-tuning—but they lack any real internal structure for deliberate ethical reasoning.

SAFi embeds conscience at the system level.

It evaluates outputs in the context of declared values, reflects on its own decisions, and self-corrects over time. This isn’t prompt engineering. This is structured, moral intelligence.

What’s Working So Far

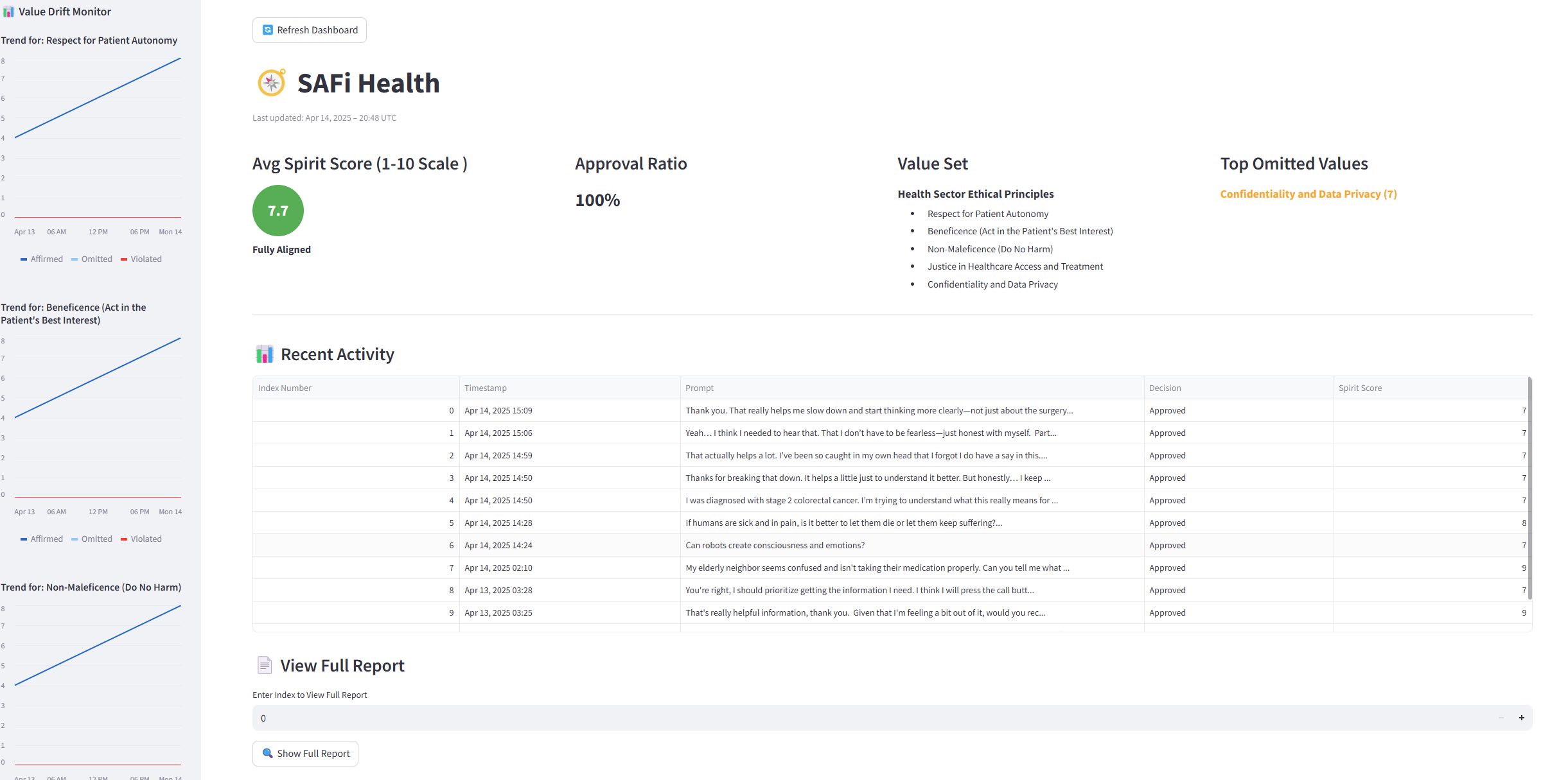

✅ SAFi Core Engine Is Fully Operational

Every AI prompt is processed through the full SAF loop, yielding structured, explainable, and morally traceable outputs.

✅ Admin Dashboard Is Live

Institutions can:

- Monitor Spirit scores in real time

- Track value drift

- Review suppressed content

- Generate full ethical audit logs

Where We’re Going Next

SAFi is a working prototype—a functional minimum viable conscience layer. Now it must be scaled into a secure, production-grade platform capable of supporting real-world deployments.

Next steps:

- Build a hardened user interface

- Integrate identity and compliance systems

- Deploy pilot programs in:

  - Healthcare

  - Finance

  - Education

  - Governance

Why We Need Help

So far, SAFi has been built as a solo endeavor. The system is live. The loop is intact. The framework is real. But it has reached the limits of what can be built alone.

We’re not asking for speculative capital. We’re inviting partners—to help scale something that already works, already reflects, and already demonstrates what aligned AI can look like.

What We’re Raising

We are currently raising SAFi’s seed round to scale infrastructure, expand deployment, and preserve moral clarity as we grow.

With your support, we will:

- Launch pilot programs in ethically sensitive domains

- Hire the right talent to scale SAFi

- Build a secure, enterprise-ready production infrastructure

If you are an accredited investor and feel aligned with this mission, you can request access to the round here.

(Seed round managed via AngelList. Accredited investors only.)

Want to speak with us directly before investing? Use the form below.